In this post, I’ll summarize and share some thoughts on the paper "GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models". The authors, from OpenAI, OpenResearch, and the University of Pennsylvania, argue that GPTs (Generative Pre-trained Transformers, like GPT-3 and GPT-4) are General Purpose Technologies, so their impact will be pervasive. The paper focuses on the potential impact of large language models (LLMs) on the U.S. workforce. According to its findings, around 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of LLMs, and about 19% of workers may see at least 50% of their tasks impacted. The authors also note that, because LLMs exhibit the traits of general-purpose technologies, they could have considerable economic, social, and policy implications.

For a technology to count as a General Purpose Technology, it has to meet three criteria:

Improvement over time

Pervasiveness throughout the economy

Ability to spawn complementary innovations

Past examples of these General Purpose Technologies include:

Steam Engine: The invention of the steam engine in the 18th century was a major breakthrough that powered the Industrial Revolution. It was pervasive and led to significant improvements in various industries, from manufacturing to transportation.

Electricity: The advent of electricity in the late 19th century brought about dramatic changes in both domestic and industrial spheres. It led to the invention of a plethora of appliances and equipment, enhancing productivity and convenience.

Internal Combustion Engine: This technology was the driving force behind automobiles and significantly impacted transportation, facilitating the movement of goods and people over vast distances.

Semiconductor Technology: Semiconductors, the basis of modern electronics, have become ubiquitous in a range of devices from computers to smartphones. The steady improvement of semiconductor technology (the trend commonly associated with Moore's Law) has profoundly shaped the digital age.

Internet: Arguably one of the most transformative GPTs, the internet has revolutionized communication, commerce, education, entertainment, and more. It has spawned numerous complementary technologies and platforms, including social media, e-commerce, and cloud computing.

I tend to agree with the authors of the paper that these generative large language models are going to be a General Purpose Technology. Of course, we won’t know for sure until time reveals how impactful and pervasive these technologies turn out to be.

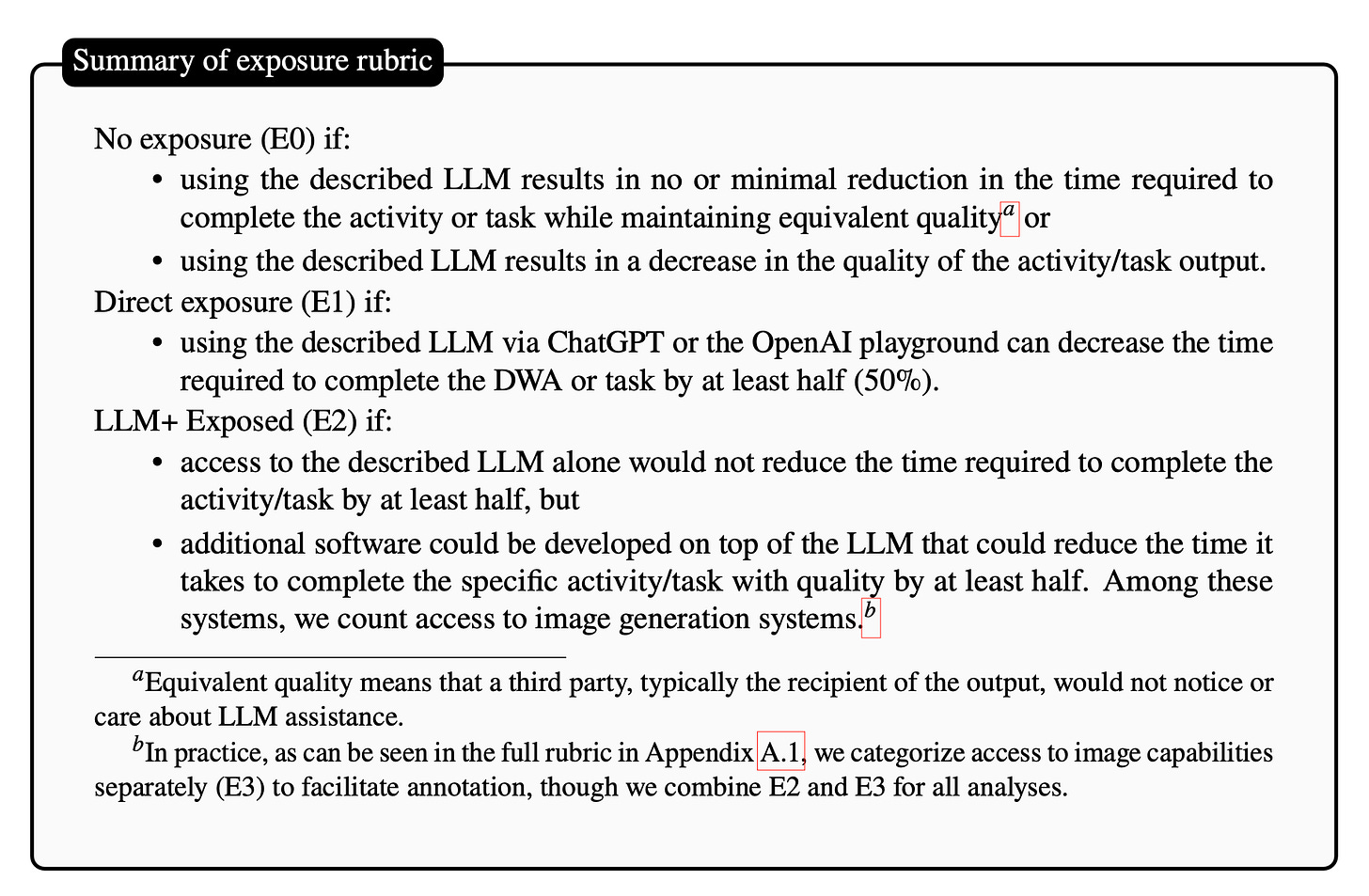

The authors of this paper use the O*NET 27.2 database, encompassing 1,016 occupations, each defined by its Detailed Work Activities (DWAs) and tasks. They rely on two datasets containing 19,265 tasks and 2,087 DWAs. They introduce an "exposure rubric" to define and measure the potential impact of large language models (LLMs) on these tasks and DWAs. A task or DWA counts as exposed if an LLM or LLM-powered system could reduce the time taken to perform it by at least 50% while maintaining equivalent quality. Exposure is classified into three categories: No exposure, Direct exposure (if using the LLM alone can cut the task time by at least half), and LLM+ Exposed (if additional software built on the LLM can cut task completion time by at least half). The 50% threshold describes a potential reduction in time, and the authors acknowledge that real-world reductions might be lower.
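To make the rubric concrete, here is a minimal sketch of how task-level labels could be rolled up into an occupation-level score, and then into a "share of workers with at least X% of tasks exposed" figure like the ones quoted at the top of this post. This is not the authors' code. The short label codes, the `Occupation` class, the function names, the equal weighting of tasks, the `e2_weight` parameter, and the toy numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List

# Short codes for the three rubric categories (shorthand for this sketch).
E0 = "E0"  # no exposure
E1 = "E1"  # direct exposure: the LLM alone could cut task time by at least half
E2 = "E2"  # LLM+ exposure: software built on the LLM could cut task time by at least half


@dataclass
class Occupation:
    name: str
    employment: int          # number of workers (toy values below)
    task_labels: List[str]   # one rubric label per task


def occupation_exposure(task_labels: List[str], e2_weight: float = 0.5) -> float:
    """Share of an occupation's tasks counted as exposed.

    e2_weight is an assumption of this sketch: 0.0 counts only direct
    exposure, 1.0 counts LLM+ tasks in full, 0.5 counts them as half exposed.
    Every task gets equal weight, which is a simplification.
    """
    if not task_labels:
        return 0.0
    weights = {E0: 0.0, E1: 1.0, E2: e2_weight}
    return sum(weights[label] for label in task_labels) / len(task_labels)


def share_of_workers_at_or_above(
    occupations: List[Occupation], threshold: float, e2_weight: float = 0.5
) -> float:
    """Employment-weighted share of workers whose occupation meets the threshold."""
    total = sum(o.employment for o in occupations)
    if total == 0:
        return 0.0
    exposed = sum(
        o.employment
        for o in occupations
        if occupation_exposure(o.task_labels, e2_weight) >= threshold
    )
    return exposed / total


# Toy, made-up occupations. These are NOT figures from the paper.
toy_occupations = [
    Occupation("hypothetical_office_role", 100, [E1, E1, E2, E0]),
    Occupation("hypothetical_mixed_role", 300, [E0, E0, E0, E2]),
    Occupation("hypothetical_manual_role", 600, [E0, E0]),
]

for threshold in (0.10, 0.50):
    share = share_of_workers_at_or_above(toy_occupations, threshold)
    print(f"workers with at least {threshold:.0%} of tasks exposed: {share:.0%}")
```

Changing `e2_weight` shows how sensitive the headline percentages are to how much credit LLM+ tasks receive, which is one reason to treat the exact numbers with some caution.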

Some of the findings of the paper include:

Higher-income jobs are more exposed: The authors find that most occupations exhibit some degree of exposure to LLMs, with exposure levels varying across different types of work. Occupations with higher wages generally show higher exposure, contrary to similar evaluations of overall exposure to machine learning.

Exposure by Industry: The paper shows that information processing industries exhibit high exposure, while manufacturing, agriculture, and mining demonstrate lower exposure.

Exposure by Skills: The findings indicate that the importance of science and critical thinking skills is strongly negatively associated with exposure, suggesting that occupations requiring these skills are less likely to be impacted by current LLMs. Conversely, programming and writing skills show a strong positive association with exposure, implying that occupations involving these skills are more susceptible to being influenced by LLMs.

I don’t think we should put too much stock in the exact percentages of jobs affected that the paper cites. We’re just in the early innings of LLMs impacting work. The paper took a task-based approach to evaluating each occupation's exposure to language models. The authors acknowledge this as a weakness:

Validity of task-based framework. It is unclear to what extent occupations can be entirely broken down into tasks, and whether this approach systematically omits certain categories of skills or tasks that are tacitly required for competent performance of a job. Additionally, tasks can be composed of sub-tasks, some of which are more automatable than others. Some tasks may function as pre-cursor to other tasks, such that the completion of downstream tasks is dependent on precursor tasks. If indeed, the task-based breakdown is not a valid representation of how most work in an occupation is performed, our exposure analysis would largely be invalidated.

I agree that a task-based approach likely doesn’t cover every responsibility of a job. However, it is a good starting point for discussions on which jobs are going to be more affected than others. And directionally, I think the paper identifies the right jobs: white-collar knowledge work will be impacted more by LLMs, whereas blue-collar, more manual work will be impacted less.

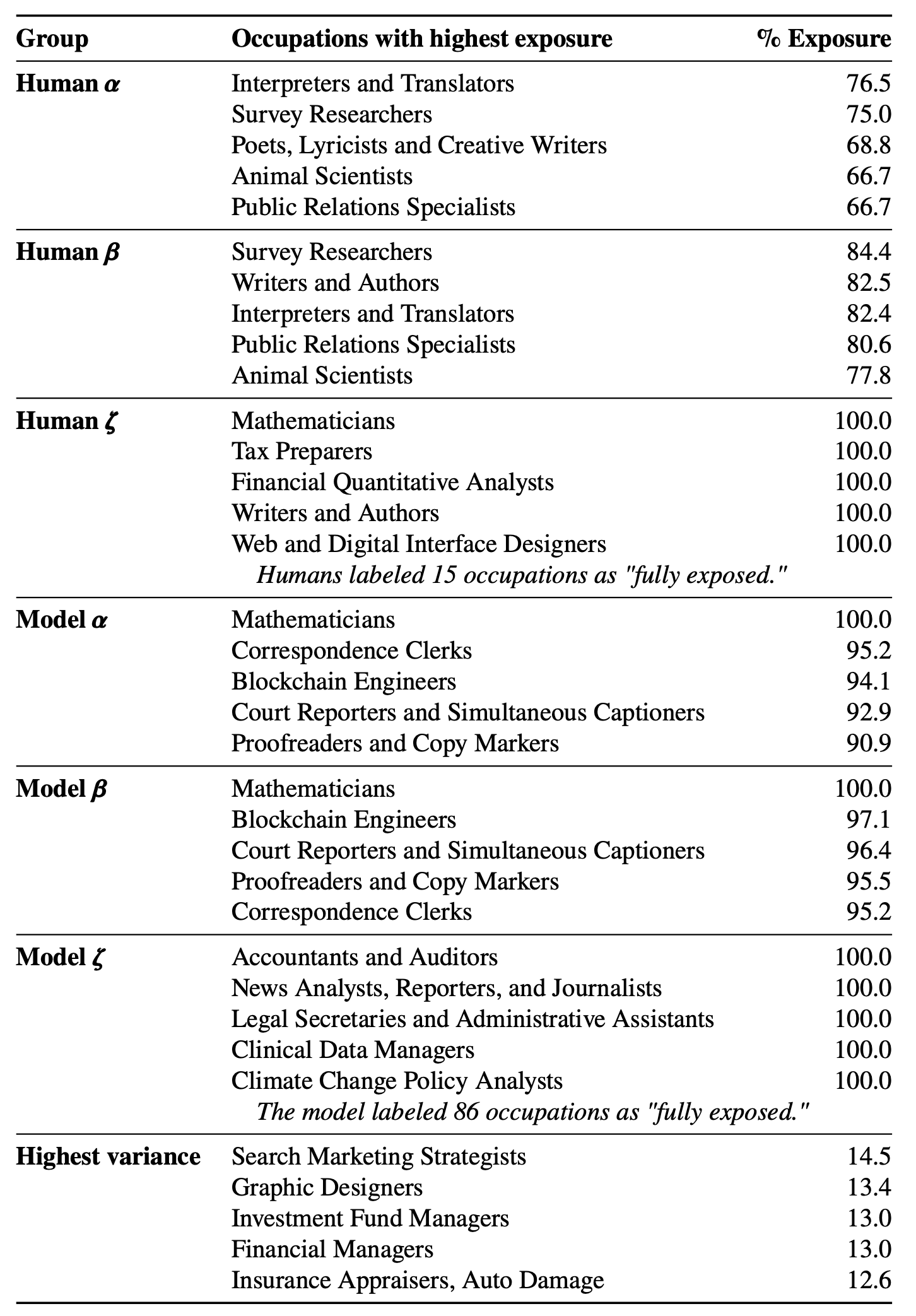

The occupations with the most exposure are below:

Mathematicians, tax preparers, writers and authors, legal secretaries, administrative assistants, and proofreaders are all rated as highly exposed to LLMs by either the human labelers or GPT-4.

And here is a sample of occupations that the paper lists as having no exposure, because none of their tasks were labeled as exposed:

Agricultural Equipment Operators

Athletes and Sports Competitors

Cooks, Short Order

Helpers–Painters, Paperhangers, Plasterers, and Stucco Masons

Slaughterers and Meat Packers

Stonemasons

Tapers

Tire Repairers and Changers

Wellhead Pumpers

It’s difficult to say that an occupation has no exposure to AI. Even very manual jobs like picking fruit can be automated. For example, there are drones that use AI to spot apples, judge which ones are ripe, and then pick them with a mechanical arm.

The emergence of LLMs as a potential General Purpose Technology opens a new chapter in our socio-economic narrative. In light of the potential impacts laid out in the paper "GPTs are GPTs", it becomes vital to question, plan, and prepare for the changes these technologies might bring. While we shouldn't lean too heavily on the paper's precise numbers, it offers a springboard for essential discussions about which roles are most likely to be affected.

The future might appear intimidating or exciting, depending on your perspective. One thing is for sure: LLMs are here to stay, and their potential to affect our working lives is significant. As we continue to explore this frontier, let's remember the power of adaptability and resilience. After all, every previous General Purpose Technology, from the steam engine to the internet, has redefined the work landscape, pushing us to evolve, adapt, and transform for the better. The same will likely hold true for LLMs. In the meantime, let's keep the conversation going, and let's keep asking the right questions.